Join our workshop on Dealing with Duplicate Data in R, which is a part of our workshops for Ukraine series!

Here’s some more info:

Title: Dealing with Duplicate Data in R

Date: Thursday, April 25th, 18:00 – 20:00 CET (Rome, Berlin, Paris timezone)

Speaker: Erin Grand works as a freelancer and Data Scientist at TRAILS to Wellness. Before TRAILS, she worked as a Data Scientist at Uncommon Schools and Crisis Text Line, and as a software programmer at NASA. In the distant past, Erin researched star formation and taught introductory courses in astronomy and physics at the University of Maryland. In her free time, Erin enjoys reading, Scottish country dancing, and singing loudly to musical theatre.

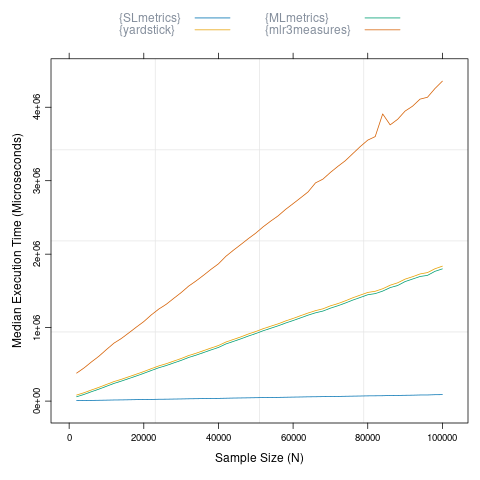

Description: Maintaining high data quality is essential for accurate analyses and decision-making. Unfortunately, high data quality is often hard to come by. This talk will focus on some “how-tos” of cleaning data and removing duplicates to enhance data integrity. We’ll go over common data quality issues, how to use the {janitor} package to identify and remove duplicates, and business practices that can help prevent data issues from happening in the first place.

Minimum registration fee: 20 euro (or 20 USD or 800 UAH)

Please note that the registration confirmation is sent to all registered participants 1 day before the workshop rather than immediately after registration.

How can I register?

- Go to https://bit.ly/3wvwMA6 or https://bit.ly/4aD5LMC or https://bit.ly/3PFxtNA and donate at least 20 euro. Feel free to donate more if you can; all proceeds go directly to support Ukraine.

- Save your donation receipt (after the donation is processed, the website gives you the option to enter an email address to which the donation receipt will be sent).

- Fill in the registration form, attaching a screenshot of the donation receipt (please attach a screenshot of the receipt that was emailed to you rather than of the page you see after the donation).

If you are not personally interested in attending, you can also contribute by sponsoring a student's participation, so that the student can attend for free. If you choose to sponsor a student, all proceeds will also go directly to organisations working in Ukraine. You can either sponsor a particular student or leave it up to us to allocate the sponsored place to a student who has signed up for the waiting list.

How can I sponsor a student?

- Go to https://bit.ly/3wvwMA6 or https://bit.ly/4aD5LMC or https://bit.ly/3PFxtNA and donate at least 20 euro (or 17 GBP or 20 USD or 800 UAH). Feel free to donate more if you can; all proceeds go to support Ukraine!

- Save your donation receipt (after the donation is processed, the website gives you the option to enter an email address to which the donation receipt will be sent).

- Fill in the sponsorship form, attaching a screenshot of the donation receipt (please attach a screenshot of the receipt that was emailed to you rather than of the page you see after the donation). You can indicate whether you want to sponsor a particular student or whether we should allocate the spot ourselves to students on the waiting list. You can also indicate whether you would like us to prioritize students from developing countries when assigning the place(s) you sponsored.

If you are a university student and cannot afford the registration fee, you can also sign up for the waiting list here. (Note that signing up for the waiting list does not guarantee participation.)

You can also find more information about this workshop series, a schedule of our future workshops, and a list of our past workshops, for which you can get the recordings and materials, here.

Looking forward to seeing you during the workshop!