Press HERE to register to the ODSC West 2019 conference with a 30% discount! (or use the code: ODSCRBloggers)

R is one of the most commonly-used languages within data science, and its applications are always expanding. From the traditional use of data or predictive analysis, all the way to machine or deep learning, the uses of R will continue to grow and we’ll have to do everything we can to keep up.

To help those beginning their journey with R, we are bringing some of the best possible talks and workshops to ODSC West 2019 to make sure you know how to work with R.

At the talk “Machine Learning in R” by Jared Lander of Columbia Business School and author of R for Everyone, you will go through the basic steps to begin using R for machine learning. He’ll start out with the theory behind machine learning and the analysis of model quality, before working up to the technical side of implementation. Walk out of this talk with a solid, actionable groundwork in machine learning.

After you have the groundwork of machine learning with R, you’ll have a chance to dive even deeper, with the talk “Causal Inference for Data Science” by Data Scientist at Coursera, Vinod Bakthavachalam. First he’ll present an overview of some causal inference techniques that could help any data scientist, and then will dive into the theory and how to perform these with R. You’ll also get an insight into the recent advancements and future of machine learning with R and causal inference. This is perfect if you have the basics down and want to be pushed a little harder.

Read ahead on this topic with Bakthavachalam’s speaker blog here.

Here, you’ll get to implement all you’ve learned by working through some of the most popular applications of R. Joy Payton, the Supervisor of Data Education at Children’s Hospital of Philadelphia, will give a talk on “Mapping Geographic Data in R.” It’s a hands-on workshop where you’ll leave having gone through the steps of using open-source data and applying techniques in R to real data visualization. She’ll leave you with the skills to do your own mapping projects and a publication-quality product.

Throughout ODSC West, you’ll have learned the foundations, the deeper understanding, and visualization in popular applications, but the last step is to learn how to tell a story with all this data. Luckily, Paul Kowalczyk, the Senior Data Scientist at Solvay, will be giving a talk on just that. He knows that the most important part of doing data science is making sure others are able to implement and use your work, so he’s going to take you step-by-step through literate computing, with particular focus on reporting your data. The workshop shows you three ways to report your data: HTML pages, slides, and a pdf document (like you would submit to a journal). Everything will be done in R and Python, and the code will be made available.

We’ve tried our best to make these talks and workshops useful to you, taking you from entry-level to publication-ready materials in R. To learn all of this and even more—and be in the same room as hundreds of the leading data scientists today—sign up for ODSC West. It’s hosted in San Francisco (one of the best tech cities in the world) from October 29th through November 1st.



Coke vs. Pepsi? data.table vs. tidy? Part 2

By Beth Milhollin, Russell Zaretzki, and Audris Mockus

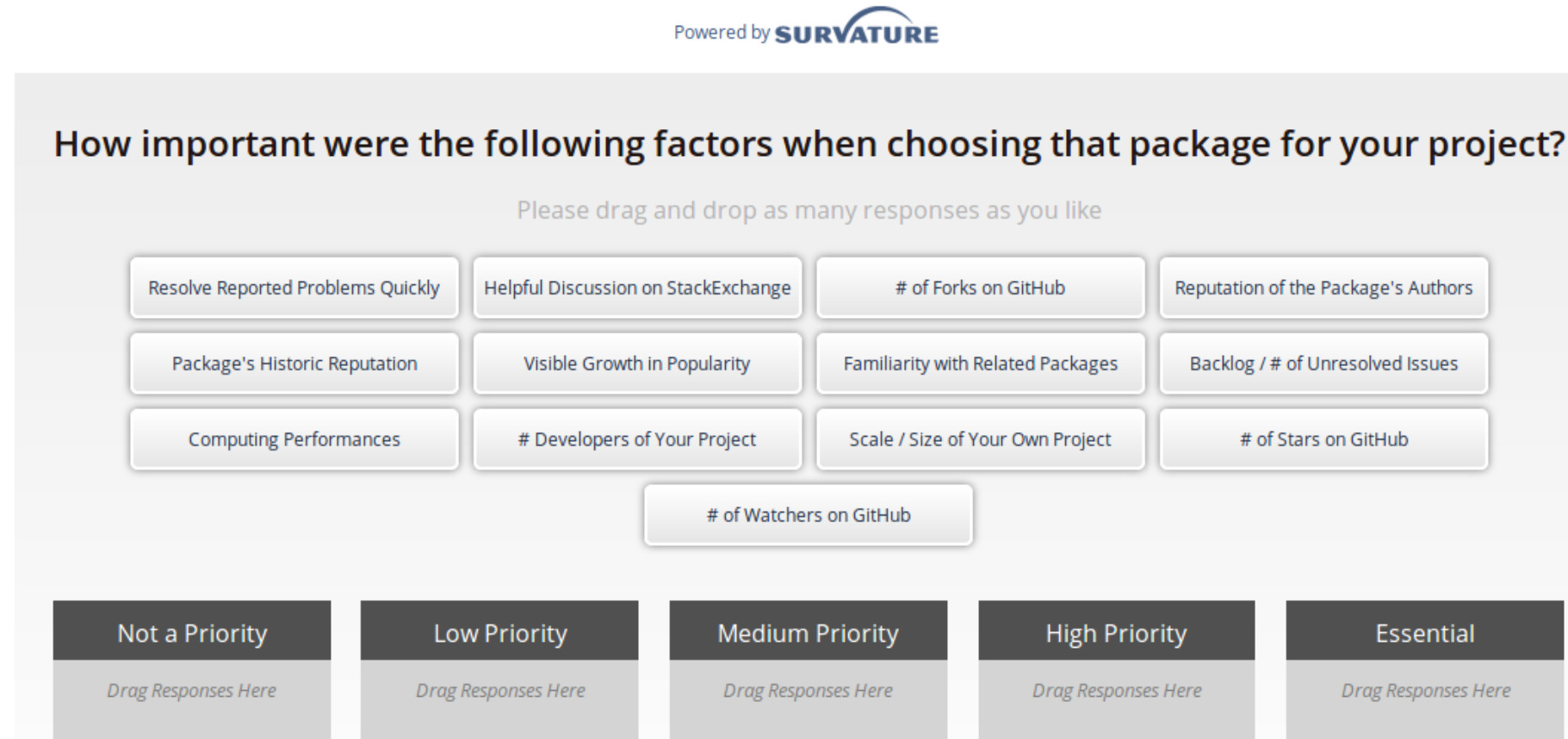

Coke vs. Pepsi is an age-old rivalry, but I am from Atlanta, so it’s Coke for me. Coca-Cola, to be exact. But I am relatively new to R, so when it comes to data.table vs. tidy, I am open to input from experienced users. A group of researchers at the University of Tennessee recently sent out a survey to R users identified by their commits to repositories, such as GitHub. The results of the full survey can be seen here. The project contributors were identified as “data.table” users or “tidy” users by their inclusion of these R libraries in their projects. Both libraries are an answer to some of the limitations associated with the basic R data frame. In the first installment of this series (found here) we used the survey data to calculate the Net Promoter Score for data.table and tidy. To recap, the Net Promoter Score (NPS) is a measure of consumer enthusiasm for a product or service based on a single survey question: “How likely are you to recommend the brand to your friends or colleagues, using a scale from 0 to 10?” Detractors of the product will respond with a 0-6, while promoters of the product will offer up a 9 or 10. A passive user will answer with a score of 7 or 8. To calculate the NPS, subtract the percentage of detractors from the percentage of promoters. When the percentage of promoters exceeds the percentage of detractors, there is potential to expand market share as the negative chatter is drowned out by the accolades. We were surprised when our survey results indicated data.table had an NPS of 28.6, while tidy’s NPS was more than double, at 59.4. Why are tidy users so much more enthusiastic? What do tidy-lovers “love” about their data frame enhancement choice? Fortunately, a few of the other survey questions may offer some insights. The survey question shown below asks the respondents how important 13 common factors were when selecting their package.
Respondents select a factor-tile, such as “Package’s Historic Reputation”, and drag it to the box that represents the priority the user places on that factor. A user can select/drag as many or as few tiles as they choose.

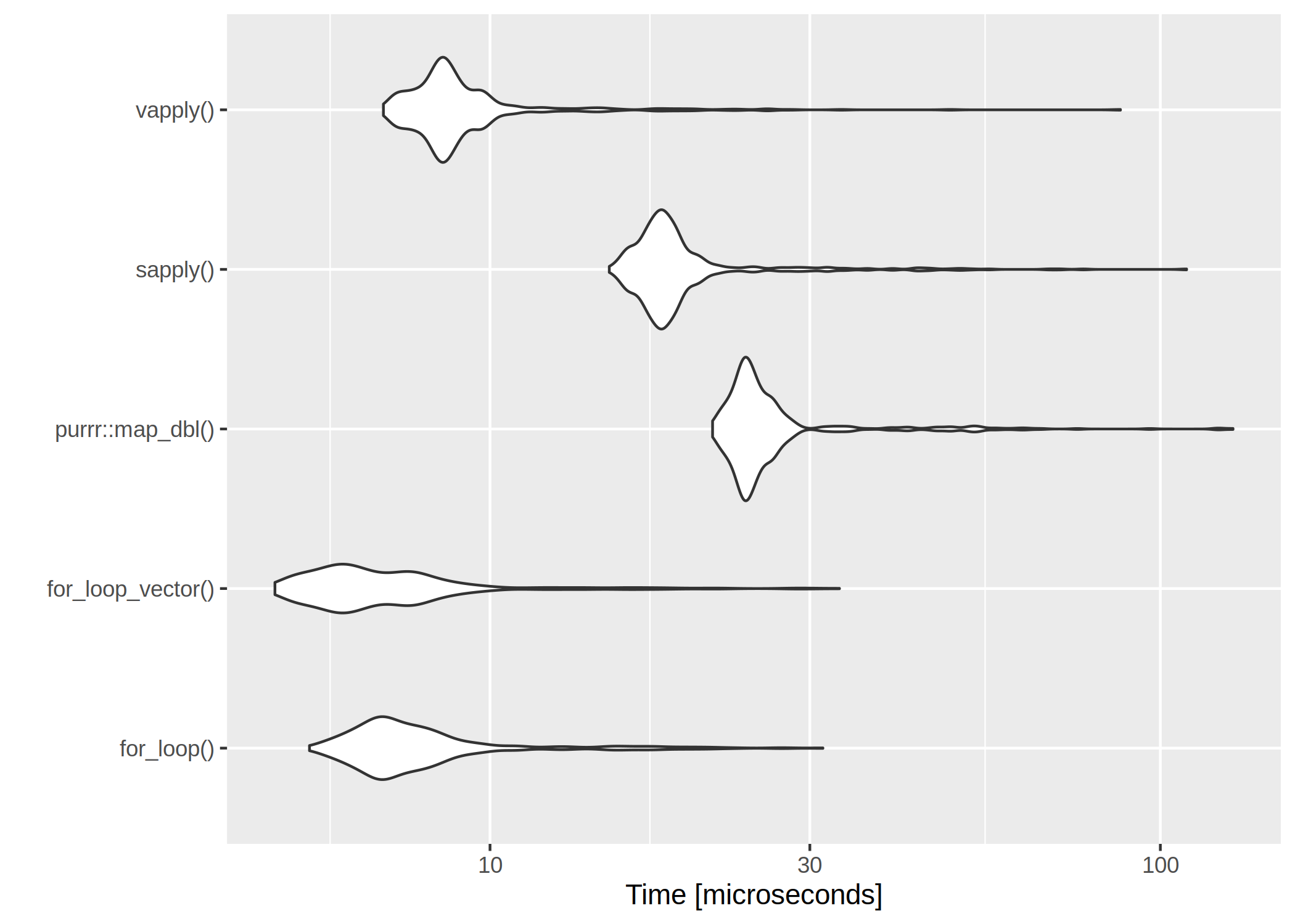
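The NPS recap above reduces to a few lines of arithmetic. The sketch below is only an illustration: the `scores` vector is made up and is not the survey's data, and `nps()` is a hypothetical helper name.

```r
# Hypothetical 0-10 answers to the "would you recommend" question.
# These values are invented for illustration, not taken from the survey.
scores <- c(10, 9, 9, 8, 7, 6, 10, 3, 9, 8)

nps <- function(scores) {
  promoters  <- mean(scores >= 9)  # share answering 9 or 10
  detractors <- mean(scores <= 6)  # share answering 0 to 6
  # Passives (7 or 8) count toward the total but toward neither group
  100 * (promoters - detractors)
}

nps(scores)  # 5 promoters and 2 detractors out of 10 give 30
```

Because passives still sit in the denominator, a package with many lukewarm users can have a low NPS even with few detractors.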

Computation time of loops — for, *apply, map

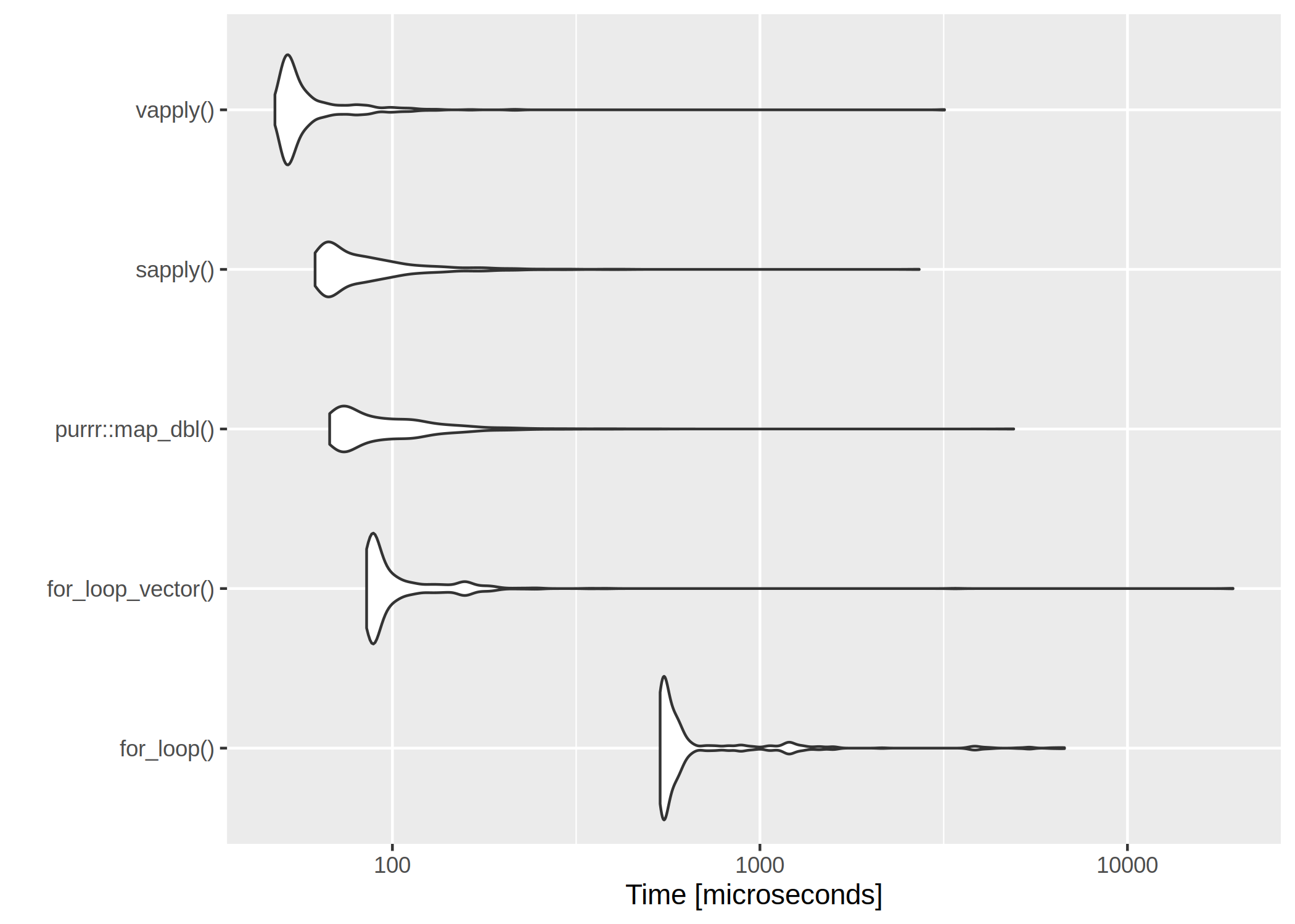

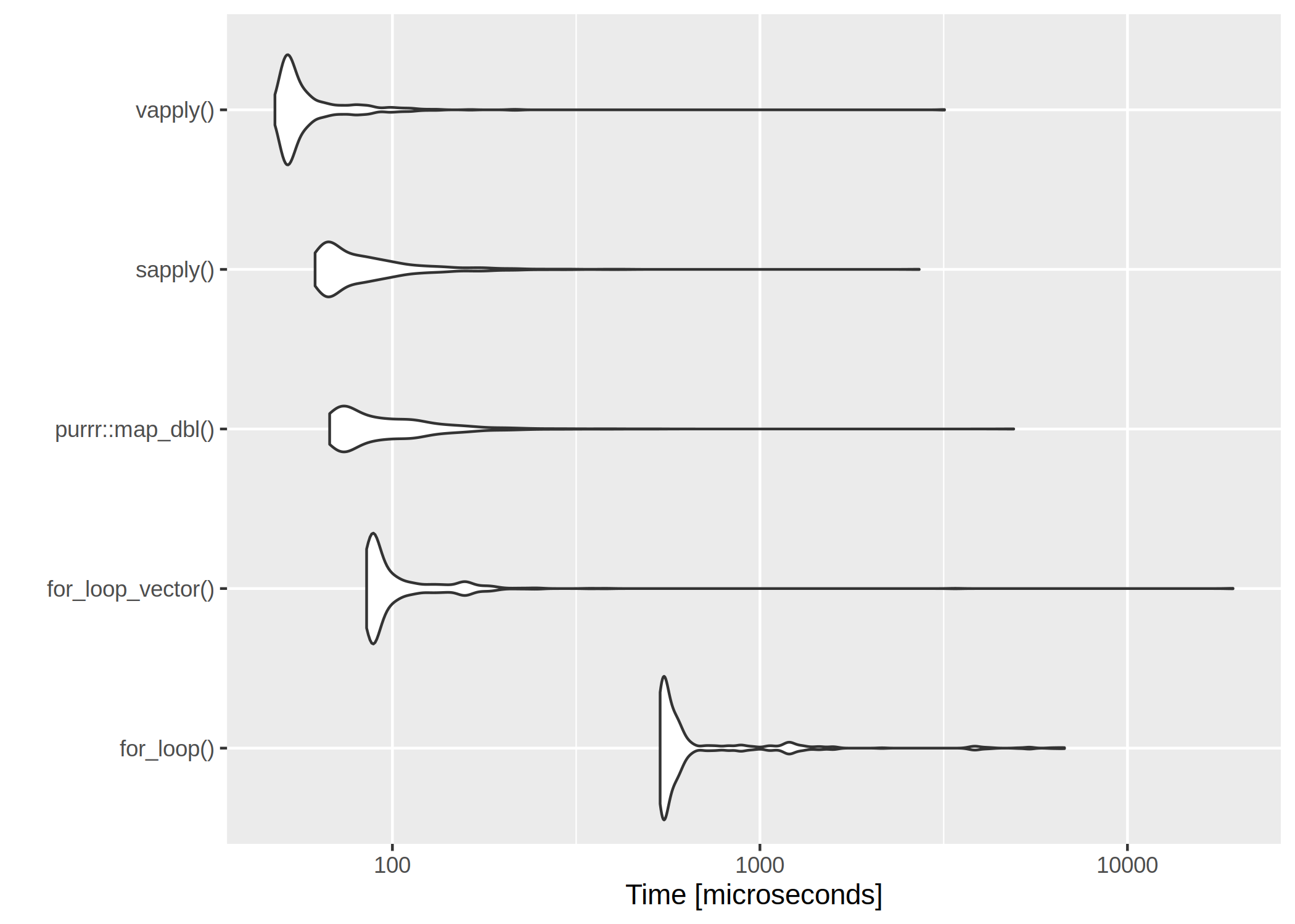

It is often said that for- and while-loops should be avoided in R. I was curious about just how the different alternatives compare in terms of speed.

The first loop is perhaps the worst I can think of – the return vector is initialized without type and length so that the memory is constantly being allocated.


use_for_loop <- function(x){

y <- c()

for(i in seq_along(x)){

y <- c(y, x[i] * 100)

}

return(y)

}

The second for loop is with preallocated size of the return vector.

use_for_loop_vector <- function(x){

y <- vector(mode = "double", length = length(x))

for(i in seq_along(x)){

y[i] <- x[i] * 100

}

return(y)

}

I have noticed I use sapply() quite a lot, but I think not once have I used vapply(). We will nonetheless look at both.

use_sapply <- function(x){

sapply(x, function(y){y * 100})

}

use_vapply <- function(x){

vapply(x, function(y){y * 100}, double(1L))

}
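One practical difference between the two, separate from speed, is that vapply() checks the declared return type. The sketch below shows this on an empty input; it is an added illustration, not part of the original benchmark.

```r
# Edge case: an empty input vector
empty <- numeric(0)

s <- sapply(empty, function(y) y * 100)
v <- vapply(empty, function(y) y * 100, double(1L))

is.list(s)    # TRUE: sapply() cannot simplify and returns an empty list
is.double(v)  # TRUE: vapply() still returns a double vector of length 0
```

The declared template `double(1L)` is what lets vapply() keep the result type stable regardless of the input length.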

And because I am a tidyverse-fanboy we also look at map_dbl().

library(purrr)

use_map_dbl <- function(x){

map_dbl(x, function(y){y * 100})

}

We test the functions using a vector of random doubles and evaluate the runtime with microbenchmark.

x <- c(rnorm(100))

mb_res <- microbenchmark::microbenchmark(

`for_loop()` = use_for_loop(x),

`for_loop_vector()` = use_for_loop_vector(x),

`purrr::map_dbl()` = use_map_dbl(x),

`sapply()` = use_sapply(x),

`vapply()` = use_vapply(x),

times = 500

)

The results are listed in the table and figure below.

| expr | min | lq | mean | median | uq | max | neval |
|---|---|---|---|---|---|---|---|
| for_loop() | 8.440 | 9.7305 | 10.736446 | 10.2995 | 10.9840 | 26.976 | 500 |
| for_loop_vector() | 10.912 | 12.1355 | 13.468312 | 12.7620 | 13.8455 | 37.432 | 500 |
| purrr::map_dbl() | 22.558 | 24.3740 | 25.537080 | 25.0995 | 25.6850 | 71.550 | 500 |
| sapply() | 15.966 | 17.3490 | 18.483216 | 18.1820 | 18.8070 | 59.289 | 500 |
| vapply() | 6.793 | 8.1455 | 8.592576 | 8.5325 | 8.8300 | 26.653 | 500 |

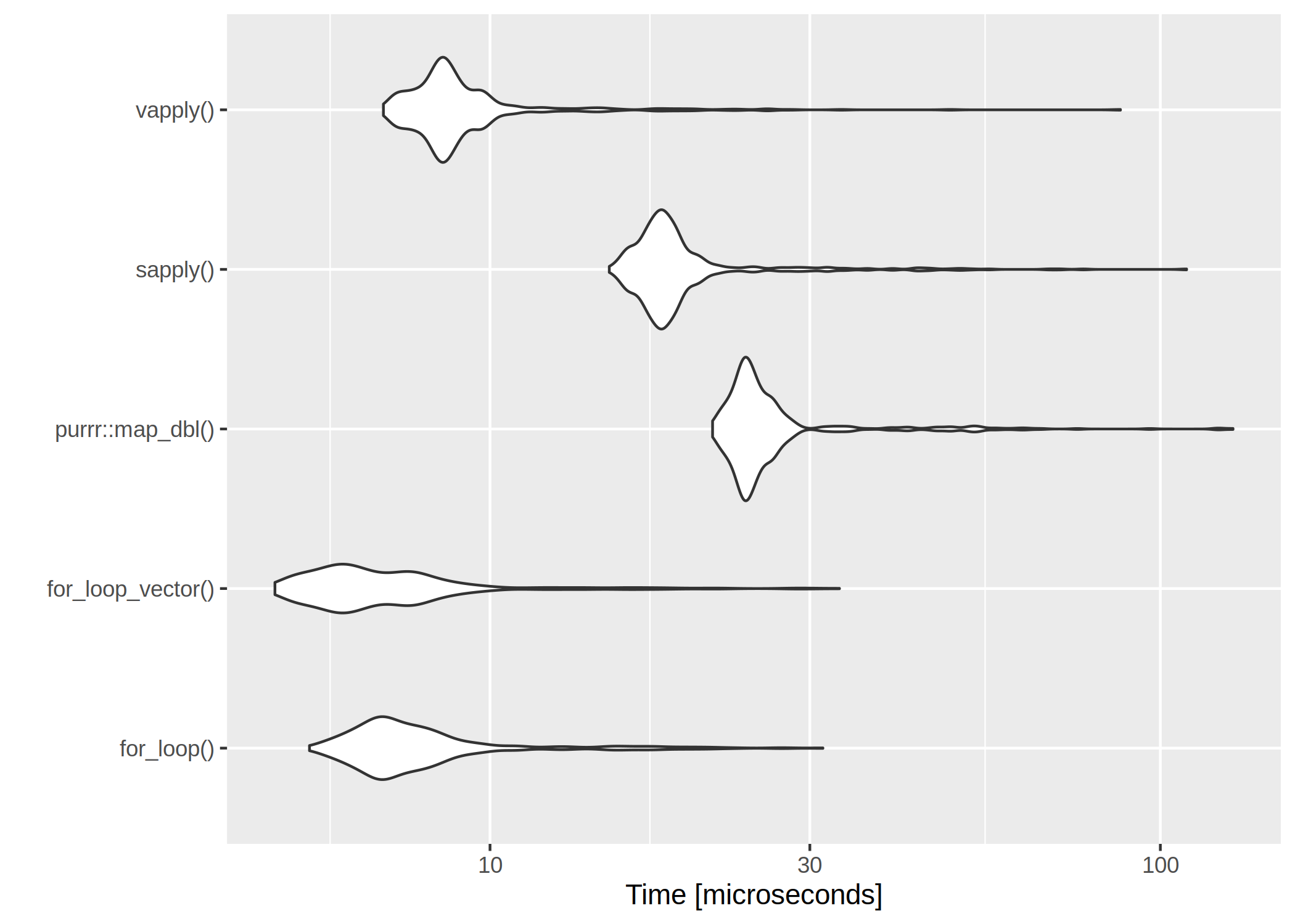

The clear winner is vapply() and for-loops are rather slow. However, if we have a very low number of iterations, even the worst for-loop isn’t too bad:


x <- c(rnorm(10))

mb_res <- microbenchmark::microbenchmark(

`for_loop()` = use_for_loop(x),

`for_loop_vector()` = use_for_loop_vector(x),

`purrr::map_dbl()` = use_map_dbl(x),

`sapply()` = use_sapply(x),

`vapply()` = use_vapply(x),

times = 500

)

| expr | min | lq | mean | median | uq | max | neval |
|---|---|---|---|---|---|---|---|
| for_loop() | 5.992 | 7.1185 | 9.670106 | 7.9015 | 9.3275 | 70.955 | 500 |
| for_loop_vector() | 5.743 | 7.0160 | 9.398098 | 7.9575 | 9.2470 | 40.899 | 500 |
| purrr::map_dbl() | 22.020 | 24.1540 | 30.565362 | 25.1865 | 27.5780 | 157.452 | 500 |
| sapply() | 15.456 | 17.4010 | 22.507534 | 18.3820 | 20.6400 | 203.635 | 500 |
| vapply() | 6.966 | 8.1610 | 10.127994 | 8.6125 | 9.7745 | 66.973 | 500 |

How to Perform Ordinal Logistic Regression in R

In this article, we discuss the basics of ordinal logistic regression and its implementation in R. Ordinal logistic regression is a widely used classification method, with applications in a variety of domains. This method is the go-to tool when there is a natural ordering in the dependent variable. For example, a dependent variable with levels low, medium, and high is a perfect context for applying ordinal logistic regression.


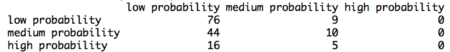


After defining the order of the levels in the dependent variable and performing exploratory data analysis, the data is ready to be partitioned into training and test sets. We will build the model using the training set and validate it using the test set. The partitioning of the data into training and test sets is random. The random sample is generated using the sample() function along with the set.seed() function. The R script for explicitly stating the order of levels in the dependent variable and creating the training and test data sets is as follows:

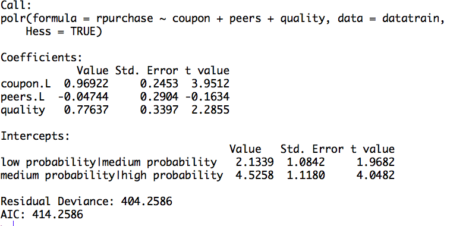

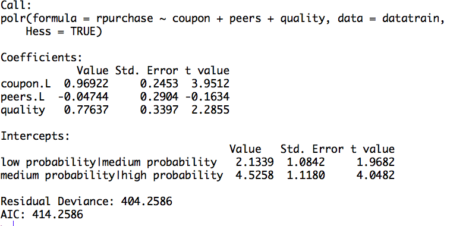


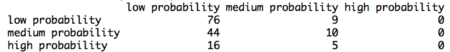


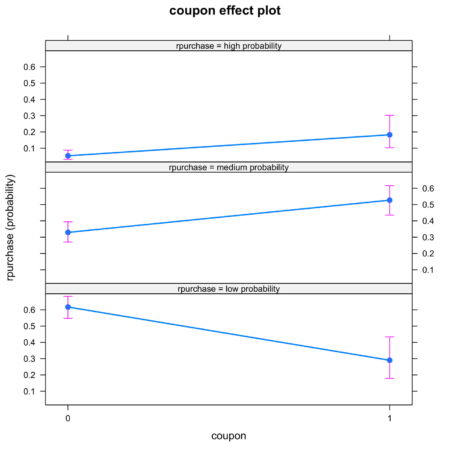


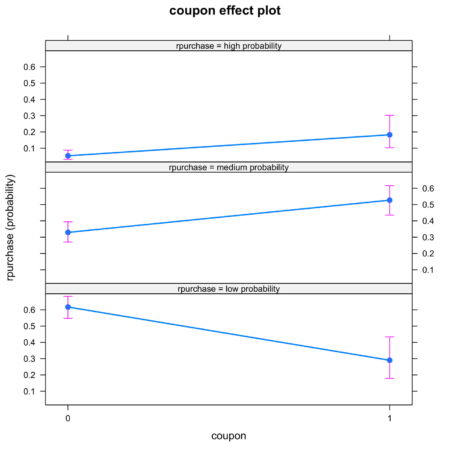


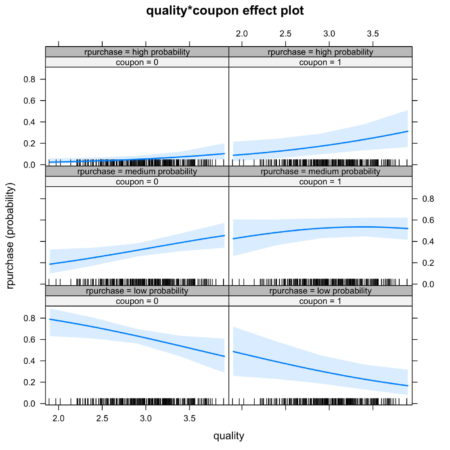

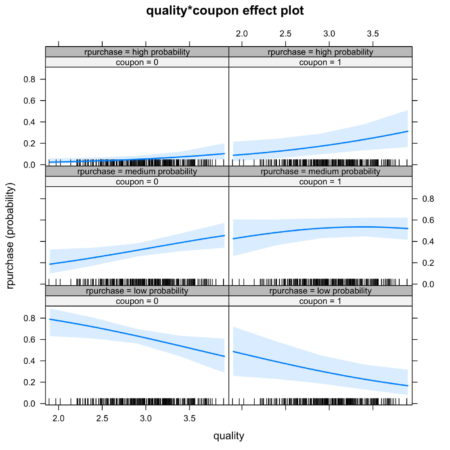


Download R-code

Credits: Chaitanya Sagar and Aman Asija of Perceptive Analytics. Perceptive Analytics is a marketing analytics and Tableau consulting company.

Having a wide range of applicability, ordinal logistic regression is considered one of the most admired methods in the field of data analytics. The method is also known as the proportional odds model because of the transformations used during estimation and the log odds interpretation of the output. We hope that this article helps our readers understand the basics and implement the model in R. The article is organized as follows. Focusing on the theoretical aspects of the technique, section 1 provides a quick review of ordinal logistic regression. Section 2 discusses the steps to perform ordinal logistic regression in R and shares the R script. Section 2 also covers the basics of interpreting and evaluating the model in R. In section 3, we learn a more intuitive way to interpret the model. Section 4 concludes the article.

Basics of ordinal logistic regression

Ordinal logistic regression is an extension of the simple logistic regression model. In simple logistic regression, the dependent variable is categorical and follows a Bernoulli distribution (for a quick reference, check out this article by Perceptive Analytics: https://www.kdnuggets.com/2017/10/learn-generalized-linear-models-glm-r.html). In ordinal logistic regression, by contrast, the dependent variable is ordinal, i.e. there is an explicit ordering in the categories. For example, during preliminary testing of a pain relief drug, the participants are asked to express the amount of relief they feel on a five-point Likert scale. Another common example of an ordinal variable is app ratings. On Google Play, customers are asked to rate apps on a scale ranging from 1 to 5. Ordinal logistic regression is handy in these examples because there is a clear order in the categorical dependent variable.

In simple logistic regression, the log of odds that an event occurs is modeled as a linear combination of the independent variables. This approach, however, ignores the ordering of the categorical dependent variable. The ordinal logistic regression model overcomes this limitation by using cumulative events for the log of odds computation. Unlike simple logistic regression, ordinal logistic models consider the probability of an event together with all the events that are below the focal event in the ordered hierarchy. For example, the event of interest in ordinal logistic regression would be to obtain an app rating equal to X or less than X. The log of odds for an app rating less than or equal to 1 would be computed as follows:

$$\text{LogOdds}(\text{rating} \le 1) = \log\frac{p(\text{rating} \le 1)}{p(\text{rating} > 1)} \quad \text{[Eq. 1]}$$

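Likewise, the log of odds can be computed for other values of app ratings. The computations for other ratings are below:

$$\text{LogOdds}(\text{rating} \le 2) = \log\frac{p(\text{rating} \le 2)}{p(\text{rating} > 2)} \quad \text{[Eq. 2]}$$

$$\text{LogOdds}(\text{rating} \le 3) = \log\frac{p(\text{rating} \le 3)}{p(\text{rating} > 3)} \quad \text{[Eq. 3]}$$

$$\text{LogOdds}(\text{rating} \le 4) = \log\frac{p(\text{rating} \le 4)}{p(\text{rating} > 4)} \quad \text{[Eq. 4]}$$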
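As a quick numeric illustration of the cumulative log odds above, the sketch below uses a made-up distribution of app ratings (the vector `p` is an assumption chosen for illustration, not data from the article).

```r
# Hypothetical share of ratings 1 to 5; invented numbers that sum to 1
p <- c(0.05, 0.10, 0.20, 0.30, 0.35)

# Cumulative probabilities P(rating <= j) for j = 1..4
cum_p <- cumsum(p)[1:4]

# Cumulative log odds: log( P(rating <= j) / P(rating > j) )
log_odds <- log(cum_p / (1 - cum_p))
round(log_odds, 2)
```

The four values increase with j because each cumulative event contains the previous one. There is no fifth value, since P(rating <= 5) equals 1.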

Because all the ratings below the focal score are considered in computation, the highest app rating of 5 will include all the ratings below it and does not have a log of odds associated with it. In general, the ordinal regression model can be represented using the LogOdds computation.

$$\text{LogOdds}(Y \le i) = \alpha_i + \beta_1 X_1 + \beta_2 X_2 + \dots + \beta_n X_n$$

where,

$Y$ is the ordinal dependent variable

$i$ indexes the cumulative splits and runs from 1 to the number of categories minus 1

$X_1, X_2, \dots, X_n$ are independent variables. They can be measured on a nominal, ordinal or continuous measurement scale.

$\beta_1, \beta_2, \dots, \beta_n$ are estimated parameters

For k ordered categories, we obtain k – 1 equations. The proportional odds assumption implies that the effect of the independent variables is identical for each log of odds computation. This is not the case for the intercept, which takes a different value for each computation. Besides the proportional odds assumption, the ordinal logistic regression model assumes an ordinal dependent variable and absence of multicollinearity. Absence of multicollinearity means that the independent variables are not highly correlated with one another. These assumptions are important because violating them makes the computed parameters unreliable.
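To see how one set of slopes and several intercepts turn into category probabilities, here is a minimal sketch under the formulation written above (log odds of Y at or below a split equal to intercept plus slope times X). The values of `alpha`, `beta` and `x` are invented. Note that MASS::polr, used later in this article, parameterizes the linear predictor with the opposite sign (intercept minus slope times X), so its coefficients cannot be plugged in here without flipping the sign.

```r
# Hypothetical parameters for a three-level outcome (two splits)
alpha <- c(-0.5, 1.5)  # one intercept per split, increasing
beta  <- 0.8           # single slope shared by both splits
x     <- 1             # value of the independent variable

# Cumulative probabilities P(Y <= 1) and P(Y <= 2)
cum_p <- plogis(alpha + beta * x)

# Category probabilities are differences of adjacent cumulative ones
cat_p <- diff(c(0, cum_p, 1))
names(cat_p) <- c("low", "medium", "high")
cat_p
```

Sharing `beta` across both splits is the proportional odds assumption in action: only the intercepts differ between the two equations.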

Model building in R

In this section, we describe the dataset and implement ordinal logistic regression in R. We use a simulated dataset for analysis. The objective of the analysis is to predict the likelihood of each level of customer purchase. The dependent variable is the likelihood of repeated purchase by customers. It is measured on an ordinal scale and takes one of three levels: low probability, medium probability, and high probability. The independent variables measure possession of a coupon by the focal customer, recommendation received from peers, and quality of the product. Possession of coupon and peer recommendation are categorical variables, while quality is measured on a scale of 1 to 5. We discuss the steps for implementing ordinal logistic regression and share the commented R script. The data is in .csv format and can be downloaded by clicking here.

Before starting the analysis, we briefly describe the preliminary steps. The first step is to keep the data file in the working directory. The next step is to explicitly define the ordering of the levels in the dependent variable and the relevant independent variables. This step is crucial, and ignoring it can lead to meaningless analysis.

#Read data file from working directory

setwd("C:/Users/You/Desktop")

data <- read.table("data.txt")

#Ordering the dependent variable

data$rpurchase = factor(data$rpurchase, levels = c("low probability", "medium probability", "high probability"), ordered = TRUE)

data$peers = factor(data$peers, levels = c("0", "1"), ordered = TRUE)

data$coupon = factor(data$coupon, levels = c("0", "1"), ordered = TRUE)
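Why the explicit `levels` argument matters can be seen on a toy vector. The sketch below is an illustration only; the three strings stand in for the article's rpurchase column.

```r
# Toy values, not the article's data
x  <- c("medium probability", "low probability", "high probability")
lv <- c("low probability", "medium probability", "high probability")

# Without explicit levels, R sorts them alphabetically
unordered <- factor(x)
levels(unordered)

# With explicit levels and ordered = TRUE, comparisons follow our order
ordered_f <- factor(x, levels = lv, ordered = TRUE)
ordered_f[1] > ordered_f[2]  # TRUE: medium ranks above low
max(ordered_f)               # high probability
```

With the default alphabetical levels, "high probability" would come first, so a model relying on level order would treat it as the lowest category.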

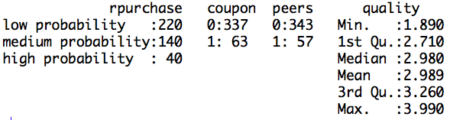

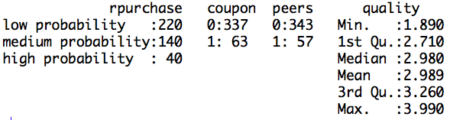

Next, it is essential to perform exploratory data analysis. Exploratory data analysis helps identify outliers and missing values, which can induce bias in our model. In this article we do basic exploration by looking at summary statistics and a frequency table. Figure 1 shows the summary statistics. We observe the count of data for ordinal variables and distribution characteristics for other variables. We compute the count of rpurchase for different values of coupon in Table 1. Note that the median value for rpurchase changes with change in coupon. The median level of rpurchase increases, indicating that coupon positively affects the likelihood of repeated purchase. The R script for summary statistics and the frequency table is as follows:

#Exploratory data analysis

#Summarizing the data

summary(data)

#Making frequency table

table(data$rpurchase, data$coupon)

Figure 1. Summary statistics

Table 1. Count of rpurchase by coupon


| Rpurchase/Coupon | With coupon | Without coupon |
|---|---|---|
| Low probability | 200 | 20 |
| Medium probability | 110 | 30 |
| High probability | 27 | 13 |
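The exploratory step also mentions missing values and outliers. A minimal sketch of such a check is shown below on a small stand-in data frame; `toy` and its values are made up and only mimic the column names used in this article.

```r
# Toy data frame standing in for the article's data (values are invented)
toy <- data.frame(
  quality = c(1, 3, NA, 5, 2),
  coupon  = factor(c("0", "1", "1", NA, "0"))
)

# Number of missing values per column
colSums(is.na(toy))

# Observed range of quality, which should stay within the 1 to 5 scale
range(toy$quality, na.rm = TRUE)
```

The same two calls could be run on the real data frame before partitioning it.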

#Dividing data into training and test set

#Random sampling

samplesize = 0.60*nrow(data)

set.seed(100)

index = sample(seq_len(nrow(data)), size = samplesize)

#Creating training and test set

datatrain = data[index,]

datatest = data[-index,]

Now, we will build the model using the data in the training set. As discussed in the above section, the dependent variable in the model is in the form of log odds. Because of the log odds transformation, it is difficult to interpret the coefficients of the model. Note that in this case the coefficients of the regression cannot be interpreted in terms of marginal effects. The coefficients are called proportional odds and are interpreted in terms of increase in log odds. The interpretation changes not only for the coefficients but also for the intercept. Unlike simple linear regression, in ordinal logistic regression we obtain n-1 intercepts, where n is the number of categories in the dependent variable. Each intercept can be interpreted as the expected log odds of identifying in the listed categories. Before interpreting the model, we share the relevant R script and the results. In the R code, we set Hess equal to TRUE, which is the logical flag to return the Hessian matrix. Returning the Hessian matrix is essential to use the summary function or to calculate the variance-covariance matrix of the fitted model.

#Build ordinal logistic regression model

#polr() is provided by the MASS package

library(MASS)

model= polr(rpurchase ~ coupon + peers + quality , data = datatrain, Hess = TRUE)

summary(model)

Figure 2. Ordinal logistic regression model on training set

The table displays the values of the coefficients and intercepts, and the corresponding standard errors and t values. The coefficients are interpreted as follows. For example, holding everything else constant, an increase in the value of coupon by one unit increases the expected value of rpurchase in log odds by 0.96. The coefficients of peers and quality can be interpreted likewise.
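Two small conversions help when reading these numbers: exponentiating a coefficient gives an odds ratio, and the inverse logit turns a log odds value into a probability. The sketch below applies them to the coupon coefficient of 0.96 quoted above and to the intercept of 2.13 quoted in the next paragraph; `inv_logit` is a hypothetical helper name, and base R's `plogis()` computes the same thing.

```r
# Coefficient and intercept as reported in the article's text
coef_coupon   <- 0.96
intercept_low <- 2.13

# A log odds coefficient becomes an odds ratio when exponentiated
odds_ratio <- exp(coef_coupon)
round(odds_ratio, 2)  # about 2.61

# Inverse logit: converts log odds into a probability
inv_logit <- function(x) 1 / (1 + exp(-x))
p_low <- inv_logit(intercept_low)
round(p_low, 2)       # about 0.89, matching the text
```

Read this way, and assuming polr's sign convention, holding a coupon multiplies the odds of falling in a higher repurchase category by roughly 2.6.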

Note that the ordinal logistic regression outputs multiple intercepts, one for each split between adjacent levels of the dependent variable. The intercepts can be interpreted as the expected log odds when the other variables assume a value of zero. For example, the low probability | medium probability intercept takes a value of 2.13, indicating that the expected log odds of identifying in the low probability category, when other variables assume a value of zero, is 2.13. Using the inverse logit transformation, the intercepts can be interpreted in terms of expected probabilities. The inverse logit transformation is $p = \frac{1}{1 + e^{-x}}$, where $x$ is the log odds. The expected probability of identifying in the low probability category, when other variables assume a value of zero, is 0.89.

After building and interpreting the model, the next step is to evaluate it. The evaluation of the model is conducted on the test dataset. A basic evaluation approach is to compute the confusion matrix and the misclassification error. The R code and the results are as follows:

#Compute confusion table and misclassification error

predictrpurchase = predict(model,datatest)

table(datatest$rpurchase, predictrpurchase)

mean(as.character(datatest$rpurchase) != as.character(predictrpurchase))

Figure 3. Confusion matrix

The confusion matrix shows the performance of the ordinal logistic regression model. For example, it shows that, in the test dataset, the low probability category is identified correctly 76 times. Similarly, the medium category is identified correctly 10 times and the high category 0 times. We observe that the model identifies the high probability category poorly. This happens because of inadequate representation of the high probability category in the training dataset. Using the confusion matrix, we find that the misclassification error for our model is 46%.
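The 46% figure can be reproduced from the counts quoted above with a little arithmetic. The sketch below assumes that the counts in Table 1 cover the whole dataset (400 rows) and that 40% of it is held out as the test set, as in the sampling code; both are inferences from the article rather than stated facts.

```r
# Total rows implied by Table 1 (assumption: the table covers all data)
n_total <- 200 + 20 + 110 + 30 + 27 + 13

# 60% goes to training, so the remaining 40% forms the test set
n_test <- n_total - 0.60 * n_total

# Correctly classified cases: low, medium and high, as quoted in the text
correct <- 76 + 10 + 0

# Misclassification error = 1 - accuracy
error <- 1 - correct / n_test
round(error, 2)  # 0.46
```

Under these assumptions, 86 of 160 test cases are classified correctly, which is consistent with the reported error.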

Interpretation using plots

Interpreting ordinal logistic regression in terms of log odds ratios is not easy. We offer an alternative approach to interpretation using plots. The R code for plotting the effects of the independent variables is as follows:

#Plotting the effects

library("effects")

Effect(focal.predictors = "quality",model)

plot(Effect(focal.predictors = "coupon",model))

plot(Effect(focal.predictors = c("quality", "coupon"),model))

The plots are intuitive and easy to understand. For example, figure 4 shows that coupon increases the likelihood of classification into the high probability and medium probability classes, while decreasing the likelihood of classification into the low probability class.

Figure 4. Effect of coupon on identification

It is also possible to look at the joint effect of two independent variables. Figure 5 shows the joint effect of quality and coupon on the identified category of the dependent variable. Observing the top row of figure 5, we notice that the interaction of coupon and quality increases the likelihood of identification in the high probability category.

Figure 5. Joint effect of quality and coupon on identification

Conclusion

The article discusses the fundamentals of ordinal logistic regression, builds the model in R, and ends with interpretation and evaluation. Ordinal logistic regression extends the simple logistic regression model to situations where the dependent variable is ordinal, i.e. can be ordered. Ordinal logistic regression has a variety of applications; for example, it is often used in marketing to increase customer lifetime value. Consumers can be categorized into different classes based on their tendency to make repeat purchases. In order to discuss the model in an applied manner, we developed this article around the case of consumer categorization. The independent variables of interest are the coupon held by consumers from a previous purchase, the influence of peers, and the quality of the product.

The article has two key takeaways. First, ordinal logistic regression comes in handy when dealing with a dependent variable that can be ordered. If one uses multinomial logistic regression instead, the information contained in the ordering of the dependent variable is ignored. Second, the coefficients of ordinal logistic regression cannot be interpreted in the same manner as the coefficients of ordinary linear regression. Interpreting the coefficients in terms of marginal effects is one of the common mistakes that users make while implementing the ordinal regression model. We again emphasize the use of the graphical method to interpret the coefficients. Using the graphical method, it is easy to understand the individual and joint effects of independent variables on the likelihood of classification.

This article can be a go-to reference for understanding the basics of ordinal logistic regression and its implementation in R. We have provided commented R code throughout the article.

Download R-code

Credits: Chaitanya Sagar and Aman Asija of Perceptive Analytics. Perceptive Analytics is a marketing analytics and Tableau consulting company.

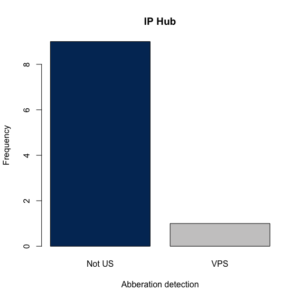

A New Release of rIP (v1.2.0) for Detecting Fraud in Online Surveys

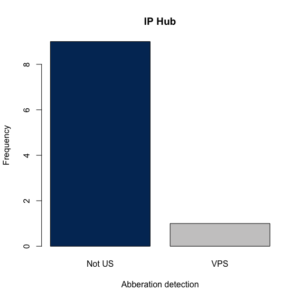

We are excited to announce the latest major release of rIP (v1.2.0), which is an R package that detects fraud in online surveys by tracing, scoring, and visualizing IP addresses. Essentially, rIP takes an array of IP addresses, which are always captured in online surveys (e.g., MTurk), and the keys for the services the user wishes to use (IP Hub, IP Intel, and Proxycheck), and passes these to all respective APIs. The output is a dataframe with the IP addresses, country, internet service provider (ISP), labels for non-US IP Addresses, whether a virtual private server (VPS) was used, and then recommendations for blocking the IP address. Users also have the option to visualize the distributions, as discussed below in the updates to v1.2.0.

Especially important here is the variable “block”, which gives a score indicating whether the IP address is likely from a “server farm” and should be excluded from the data. It is coded 0 if the IP is residential/unclassified (i.e. a safe IP), 1 if the IP is non-residential (hosting provider, proxy, etc.; it should likely be excluded, though the decision to do so is left to the researcher), and 2 for non-residential and residential IPs (more stringent, may flag innocent respondents).
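To make the 0/1/2 coding concrete, here is a minimal sketch of how a researcher might filter survey responses on such a score. The data frame `ip_results` and its column names are assumptions made up for illustration (the addresses come from a reserved documentation range); the actual column names in the package output may differ.

```r
# Hypothetical results resembling the data frame described above;
# column names and values are assumed for illustration only.
ip_results <- data.frame(
  IPAddress = c("192.0.2.1", "192.0.2.2", "192.0.2.3", "192.0.2.4"),
  block     = c(0, 1, 0, 2),
  stringsAsFactors = FALSE
)

# How many addresses fall into each block category
print(table(ip_results$block))

# Lenient rule: drop only clearly non-residential IPs (block == 1)
keep_lenient <- ip_results[ip_results$block != 1, ]

# Strict rule: keep only residential/unclassified IPs (block == 0)
keep_strict <- ip_results[ip_results$block == 0, ]

print(keep_lenient)
print(keep_strict)
```

Which rule to apply remains the researcher's decision; the strict rule trades a cleaner sample for the risk of dropping legitimate respondents.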

Including some great contributions from Bob Rudis, some of the key feature updates included in v1.2.0 of rIP are:

- Added discrete API endpoints for the three IP services so users can use this as a general purpose utility package as well as for the task-specific functionality currently provided. Each endpoint is tied to an environment variable for the secret info (API key or contact info). This is documented in each function.

- On-load computed package global .RIP_UA which is an httr user_agent object, given the best practice to use an identifiable user agent when making API calls so the service provider can track usage and also follow up with any issues they see.

- A new plotting option that, when set to “TRUE”, produces a barplot of the IP addresses checked with color generated via the amerika package.

- Users can now supply any number of IP service keys they wish to use (1, 2, or all 3), and the function will ping only the preferred IP check services (formerly, the package required all three keys or none to be entered).

- For those interested in reading more and citing the package in published work, check out our recently published software paper in the Journal of Open Source Software.
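Since the first update above ties each endpoint to an environment variable, keys do not need to be written into a script. The sketch below shows the general base R pattern only; `MY_IPHUB_KEY` is a hypothetical variable name chosen for this example, and the names the package actually expects are documented in each function.

```r
# "MY_IPHUB_KEY" is a hypothetical name used only for this sketch.
# In practice the value would be set outside the script, e.g. in ~/.Renviron.
Sys.setenv(MY_IPHUB_KEY = "replace-with-your-own-key")

# Read the key back; unset = NA makes a missing variable easy to detect
ip_hub_key <- Sys.getenv("MY_IPHUB_KEY", unset = NA)

if (is.na(ip_hub_key) || !nzchar(ip_hub_key)) {
  stop("Set MY_IPHUB_KEY before calling the IP services")
}
```

Keeping the key in the environment means a script can be shared or published without exposing personal credentials.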

# Install and load rIP, v1.2.0

install.packages("rIP")

library(rIP)

# Store personal keys (only "IP Hub" used here)

ip_hub_key = "MzI2MTpkOVpld3pZTVg1VmdTV3ZPenpzMmhodkJmdEpIMkRMZQ=="

ipsample = data.frame(rbind(c(1, "15.79.157.16"), c(2, "1.223.176.227"), c(3, "72.167.36.25"), c(4, "5.195.165.176"),

c(5, "27.28.25.206"), c(6, "106.118.241.121"), c(7, "231.86.14.33"), c(8, "42.56.9.80"), c(9, "41.42.62.229"),

c(10, "183.124.243.176")))

names(ipsample) = c("number", "IPAddress")

# Call the getIPinfo function to check the IPs

getIPinfo(ipsample, "IPAddress", iphub_key = ip_hub_key, plots = TRUE)

Running the code above will generate the following plot, as well as the dataframe mentioned above.

Note that to use the package, users must have valid personal keys for any IP service they wish to call via the function. These can be obtained for free at the corresponding IP check services.

Finally, we welcome contributions and reports of bugs either by opening an issue ticket or a pull request at the corresponding Github repository. Thanks and enjoy the package!

Workshop – R Bootcamp: For Newcomers to R

Sunday, June 16, 2019 in Las Vegas

Two and a half hour workshop: 2:00pm-4:30pm

Intended Audience: Practitioners who wish to learn the nuts and bolts of the R language; anyone who wants “to turn ideas into software, quickly and faithfully.”

Knowledge Level: Experience with handling data (e.g. spreadsheets) or hands-on familiarity with any programming language.

Workshop Description

This afternoon workshop launches your tenure as a user of R, the well-known open-source platform for data science and machine learning. The workshop stands alone as the perfect way to get started with R, or may serve to prepare for the more advanced full-day hands-on workshop, “R for Predictive Modeling”. Designed for newcomers to the language of R, “R Bootcamp” covers the R ecosystem and core elements of the language, so you attain the foundations for becoming an R user. Topics include common tools for data import, manipulation and export. If time allows, other topics will be covered, such as graphical systems in R (e.g. LATTICE and GGPLOT) and automated reporting.

The instructor will guide attendees on hands-on execution with R, covering:

- A working knowledge of the R system

- The strengths and limitations of the R language

- Core language features

- The best tools for merging, processing and arranging data

Workshop – R for Machine Learning: A Hands-On Introduction

Monday, June 17, 2019 in Las Vegas

Full-day Workshop: 8:30am – 4:30pm

Intended Audience: Practitioners who wish to learn how to execute on predictive analytics by way of the R language; anyone who wants “to turn ideas into software, quickly and faithfully.”

Knowledge Level: Either hands-on experience with predictive modeling (without R) or both hands-on familiarity with any programming language (other than R) and basic conceptual knowledge about predictive modeling is sufficient background and preparation to participate in this workshop. The 2 1/2 hour “R Bootcamp” is recommended preparation for this workshop.

This one-day session provides a hands-on introduction to R, the well-known open-source platform for data analysis. Real examples are employed in order to methodically expose attendees to best practices driving R and its rich set of predictive modeling (machine learning) packages, providing hands-on experience and know-how. R is compared to other data analysis platforms, and common pitfalls in using R are addressed.

The instructor will guide attendees on hands-on execution with R, covering:

- A working knowledge of the R system

- The strengths and limitations of the R language

- Preparing data with R, including splitting, resampling and variable creation

- Developing predictive models with R, including the use of these machine learning methods: decision trees, ensemble methods, and others.

- Visualization: Exploratory Data Analysis (EDA), and tools that persuade

- Evaluating predictive models, including viewing lift curves, variable importance and avoiding overfitting

Each workshop participant is required to bring their own laptop running Windows or OS X. The software used during this training program, R, is free and readily available for download. Attendees receive an electronic copy of the course materials and related R code at the conclusion of the workshop.

Schedule

- Workshop starts at 8:30am

- Morning Coffee Break at 10:30am – 11:00am

- Lunch provided at 12:30pm – 1:15pm

- Afternoon Coffee Break at 3:00pm – 3:30pm

- End of the Workshop: 4:30pm

Instructor

Brennan Lodge, Data Scientist VP, Goldman Sachs

Brennan is a self-proclaimed data nerd. He has been working in the financial industry for the past ten years and is striving to save the world with a little help from our machine friends. He has held cyber security, data scientist, and leadership roles at JP Morgan Chase, the Federal Reserve Bank of New York, Bloomberg, and Goldman Sachs. Brennan holds a Masters in Business Analytics from New York University and participates in the data science community with his non-profit pro-bono work at DataKind, and as a co-organizer for the NYU Data Science and Analytics Meetup. Brennan is also an instructor at the New York Data Science Academy and teaches data science courses in R and Python.

Introduction to statistics with origami (and R examples)

I have written a small booklet (about 120 pages, A5 size) on statistics and origami.

It is a very simple, basic book, useful for introductory courses.

There are several R examples, with the R script for each of them.

You can download the files for free from:

http://www.mariocigada.com/mariodocs/origanova_e.html

In the .zip file you will find:

- the text in .pdf format

- a colour cover

- a (small) dataset

- a .R file with all the scripts

Here is a link for downloading the book directly, I hope someone can find it useful:

Link for downloading the book: origanova2en

ciao

m

ODSC East 2019 Talks to Expand and Apply R Skills

R programmers are not necessarily data scientists; many are software engineers. We have an entirely new multitrack focus area that helps engineers learn AI skills: AI for Engineers. This focus area is designed specifically to help programmers get familiar with AI-driven software that utilizes deep learning and machine learning models to enable conversational AI, autonomous machines, machine vision, and other AI technologies that require serious engineering.

For example, some use R in reinforcement learning – a popular topic and arguably one of the best techniques for sequential decision making and control policies. At ODSC East, Leonardo De Marchi of Badoo will present “Introduction to Reinforcement Learning,” where he will go over the fundamentals of RL all the way to creating unique algorithms, and everything in between.

Well, what if you’re missing data? That will mess up your entire workflow, R or otherwise. In “Good, Fast, Cheap: How to do Data Science with Missing Data” with Matt Brems of General Assembly, you will start by visualizing missing data and identifying the three different types of missing data, which will help you decide whether to avoid, ignore, or account for the missing data. Matt will give you practical tips for working with missing data and recommendations for integrating it with your workflow.

How about getting some context? In “Intro to Technical Financial Evaluation with R” with Ted Kwartler of Liberty Mutual and Harvard Extension School, you will learn how to download and evaluate equities with the TTR (technical trading rules) package. You will evaluate an equity according to three basic indicators and be introduced to backtesting so you can carry out more sophisticated analyses on your own.

There is a widespread belief that the twin modeling goals of prediction and explanation are in conflict. That is, if one desires superior predictive power, then by definition one must pay a price of having little insight into how the model made its predictions. In “Explaining XGBoost Models – Tools and Methods” with Brian Lucena, PhD, Consulting Data Scientist at Agentero you will work hands-on using XGBoost with real-world data sets to demonstrate how to approach data sets with the twin goals of prediction and understanding in a manner such that improvements in one area yield improvements in the other.

What about securing your deep learning frameworks? In “Adversarial Attacks on Deep Neural Networks” with Sihem Romdhani, Software Engineer at Veeva Systems, you will answer questions such as how do adversarial attacks pose a real-world security threat? How can these attacks be performed? What are the different types of attacks? What are the different defense techniques so far and how to make a system more robust against adversarial attacks? Get these answers and more here.

Data science is an art. In “Data Science + Design Thinking: A Perfect Blend to Achieve the Best User Experience,” Michael Radwin, VP of Data Science at Intuit, will offer a recipe for how to apply design thinking to the development of AI/ML products. You will learn to go broad to go narrow, focusing on what matters most to customers, and how to get customers involved in the development process by running rapid experiments and quick prototypes.

Lastly, your data and hard work mean nothing if you don’t do anything with them, which is why “data storytelling” is more important than ever. In “Data Storytelling: The Essential Data Science Skill,” Isaac Reyes, TEDx speaker and founder of DataSeer, will discuss some of the most important aspects of data storytelling and visualization, such as what data to highlight, how to use colors to bring attention to the right numbers, the best charts for each situation, and more.

Ready to apply all of your R skills to the above situations? Learn more techniques, applications, and use cases at ODSC East 2019 in Boston this April 30 to May 3! Save 10% off the public ticket price when you use the code RBLOG10 today. Register Here

More on ODSC:

ODSC East 2019 is one of the largest applied data science conferences in the world. Speakers include some of the core contributors to many open source tools, libraries, and languages. Attend ODSC East in Boston this April 30 to May 3 and learn the latest AI & data science topics, tools, and languages from some of the best and brightest minds in the field.

Coke vs. Pepsi? data.table vs. tidy? Examining Consumption Preferences for Data Scientists

By Beth Milhollin, Russell Zaretzki, and Audris Mockus

Coke and Pepsi have been fighting the cola wars for over one hundred years and, by now, if you are still willing to consume soft drinks, you are either a Coke person or a Pepsi person. How did you choose? Why are you so convinced and passionate about the choice? Which side is winning and why? Similarly, some users of R prefer the enhancements to the data frame provided by data.table, while others lean towards the tidy-related framework. Both popular R packages provide functionality that is lacking in the built-in R data frame. While the competition between them spans much less than one hundred years, can we draw parallels and learn from the deeper understanding of consumption choices exhibited in the Coke vs. Pepsi battle? One of the basic measures of consumer enthusiasm is the so-called Net Promoter Score (NPS).

In the business world, the NPS is widely used for products and services and it can be a leading indicator for market share growth. One simple question is the basis for the NPS – How likely are you to recommend the brand to your friends or colleagues, using a scale from 0 to 10? Respondents who give a score of 0-6 belong to the Detractors group. These unhappy customers will tend to voice their displeasure and can damage a brand. The Passive group gives a score of 7-8, and they are generally satisfied, but they are also open to changing brands. The Promoters are the loyal enthusiasts who give a score of 9-10, and these coveted cheerleaders will fuel the growth of a brand. To calculate the NPS, subtract the percentage of Detractors from the percentage of Promoters. A minimum score of -100 means everyone is a Detractor, and the elusive maximum score of +100 means everyone is a Promoter.
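The calculation described above can be sketched in a few lines of R. The function name `nps` and the `example_scores` vector are made up for illustration; they are not the survey data discussed in this post.

```r
# Net Promoter Score: percentage of Promoters (9-10) minus percentage of Detractors (0-6)
nps <- function(scores) {
  stopifnot(all(scores >= 0 & scores <= 10))
  promoters  <- mean(scores >= 9)   # share of respondents scoring 9 or 10
  detractors <- mean(scores <= 6)   # share of respondents scoring 0 to 6
  100 * (promoters - detractors)
}

# Made-up responses: 5 Promoters, 2 Passives, 3 Detractors
example_scores <- c(10, 9, 9, 8, 7, 6, 3, 10, 9, 5)
print(nps(example_scores))  # 50% Promoters minus 30% Detractors

# The two extremes of the scale
print(nps(rep(0, 5)))   # everyone is a Detractor
print(nps(rep(10, 5)))  # everyone is a Promoter
```

Passives (7-8) count towards the number of respondents but do not otherwise enter the score, which is why they only appear in the denominators.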

How does the NPS play out in the cola wars? For 2019 there is a standoff, with Coca-Cola and Pepsi tied at 20. Instinct tells us that an NPS over 0 is good, but how good? The industry average for fast moving consumer goods is 30 (“Pepsi Net Promoter Score 2019 Benchmarks”, 2019). We will let you be the judge.

In the Fall of 2018 we surveyed R users identified by the first commit that added a dependency on either package to a public repository (a repository hosted on GitHub, BitBucket, and other so-called software forges) with some code written in R language. The results of the full survey can be seen here. The responding project contributors were identified as “data.table” users or “tidy” users by their inclusion of the corresponding R libraries in their projects. The survey responses were used to obtain a Net Promoter Score.

Unlike the cola wars, the results of the survey indicate that data.table has a near-industry-average NPS of 28.6, while tidy brings home the gold with 59.4. What makes tidy users so much more enthusiastic about their choice of data structure library? Tune in for the next installment, where we try to track down what factors drive a user's choice.

References: “Pepsi Net Promoter Score 2019 Benchmarks.” Pepsi Net Promoter Score 2019 Benchmarks | Customer.guru, customer.guru/net-promoter-score/pepsi.
